State of Cloud Native 2025: What the most influential industry reports reveal

At Cuemby, as active members of the cloud-native community and the CNCF ecosystem, we treat these reports as working tools for the industry. They give us room to step back from day-to-day urgency, look at the full map, and locate our own reality within that picture. This article offers a deep reading of that evidence: it organizes the data, highlights the trends that are actually reshaping the landscape, and introduces questions that can elevate the technical discussions inside your organization. The invitation is to move through it at your own pace, mark what resonates with your context, and use these findings as raw material for better decisions—especially in emerging markets where every technology choice carries very concrete operational and financial consequences.

Every year, the cloud-native industry's survey research gives us a fairly reliable X-ray of where the world is heading: which technologies are consolidating, which practices are stuck in proof-of-concept limbo, and where efficiency and competitiveness are really being decided.

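In 2025, that X-ray comes mainly from the following sources:

- State of Cloud Native Development Q3 2025 (CNCF + SlashData)

- A set of complementary studies, each cited by name throughout this article: CNCF Tech Radar Q3 2025, State of Production Kubernetes 2025, Data on Kubernetes 2025 Report, A 2025 look at real-world Kubernetes version adoption (Datadog Security Labs), 2025 Observability Forecast and State of Observability for Retail 2025 (New Relic), and State of FinOps 2025.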

The easy reaction is to treat these as “interesting, but distant” — something that only matters to large global players. This article aims for the opposite: to extract from those datasets ideas that any organization can use, especially in emerging markets, to understand:

- what is actually happening in cloud native,

- what’s coming at the intersection of AI, data, and cost,

- and which questions are worth asking internally right now.

No product pitch. No sales narrative. Just technical interpretation, grounded in evidence.

1. The 2025 map of cloud-native development

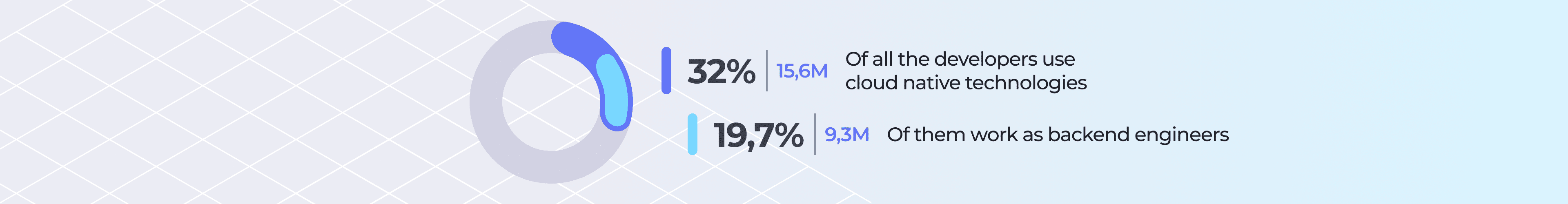

The first big number from the CNCF report is about scale: 15.6 million developers are using cloud-native technologies worldwide, roughly 32% of all developers. Around 9.3 million of them work in backend.

(Report: State of Cloud Native Development Q3 2025)

Among backend developers, the shift is clear:

- 56% of backend developers are “cloud native” by Q3 2025, up from 49% just six months earlier.

- 77% of backend developers use at least one cloud-native technology (containers, Kubernetes, observability, serverless, etc.).

(Same report: State of Cloud Native Development Q3 2025)

In other words, “cloud native” stopped being a niche world of SREs and K8s operators and has become the default context for a majority of backend developers.
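As a rough sanity check on how these figures fit together, the sketch below derives a few numbers they imply. The derived values are not stated in the report; they are back-of-the-envelope estimates that assume the rounded percentages quoted above can be combined directly, and the function names are illustrative.

```python
# Figures quoted above from State of Cloud Native Development Q3 2025.
CLOUD_NATIVE_DEVS_M = 15.6     # millions of developers using cloud-native tech
CLOUD_NATIVE_SHARE = 0.32      # "roughly 32% of all developers"
BACKEND_CLOUD_NATIVE_M = 9.3   # millions of those developers who work in backend
BACKEND_CN_PREVIOUS = 0.49     # cloud-native share of backend, six months earlier
BACKEND_CN_CURRENT = 0.56      # cloud-native share of backend, Q3 2025


def implied_total(part: float, share: float) -> float:
    """Size of the whole population implied by a part and its share."""
    return part / share


def point_and_relative_change(previous: float, current: float) -> tuple[float, float]:
    """Return (percentage-point change, relative change in percent)."""
    points = (current - previous) * 100
    relative = (current - previous) / previous * 100
    return points, relative


if __name__ == "__main__":
    total = implied_total(CLOUD_NATIVE_DEVS_M, CLOUD_NATIVE_SHARE)
    backend_share = BACKEND_CLOUD_NATIVE_M / CLOUD_NATIVE_DEVS_M
    points, relative = point_and_relative_change(BACKEND_CN_PREVIOUS, BACKEND_CN_CURRENT)
    print(f"Implied developer population: ~{total:.0f} million")
    print(f"Backend share of cloud-native developers: ~{backend_share:.0%}")
    print(f"Backend cloud-native growth: {points:.0f} points ({relative:.0f}% relative)")
```

With the quoted figures this works out to roughly 49 million developers overall, a backend share of about 60% within the cloud-native population, and a 7-point rise (about 14% in relative terms) in six months.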

The report also breaks down what backend folks actually use:

- 50% use API gateways.

- 46% work with microservices.

- 30% use Kubernetes directly (down from a 36% peak in 2023).

- More advanced practices like immutable infrastructure or chaos engineering show up in only 7% and 6% of backend developers, respectively.

(State of Cloud Native Development Q3 2025)

That already suggests something important for readers in emerging markets:

Large parts of the world are running on top of the outcomes of advanced practices (microservices, gateways, observability), while relatively few teams have fully absorbed deep resilience and heavy automation as everyday discipline.

The race is not “over”. It’s entering a different phase.

2. From “going to the cloud” to deciding how to be in the cloud

Across reports, a common thread appears — one that many teams on the ground will recognize. The discussion has moved away from “cloud: yes or no?” and toward “which mix of public cloud, on-prem, and edge actually makes sense for our applications and regulations?”

In CNCF/SlashData’s numbers:

- Across all developers, use of hybrid cloud rises from 22% in 2021 to roughly 30–32% in 2025.

- Multi-cloud reaches 23–26%, depending on whether you look at backend vs. the full developer population.

- Distributed cloud starts to show up meaningfully in backend: 15% of backend developers say they use it in Q3 2025, up from 12% at the start of the year.

(State of Cloud Native Development Q3 2025)

CNCF’s coverage and commentary around the report point in the same direction: hybrid and multi-cloud are increasingly structural strategies, and distributed cloud is emerging where teams need to run workloads close to users and data sources.

(Based on State of Cloud Native Development Q3 2025)

For emerging markets, that shift matters because:

- data regulation, latency, and network cost/quality are often tougher constraints than in North America or Western Europe,

- and copying architectures designed for companies with three continent-scale data centers can become very expensive, with less payoff.

The takeaway is not that “everyone must go hybrid or multi-cloud.” It’s something more nuanced:

The global standard is moving toward more distributed architectures; each region and market will need to design its own pragmatic version of that standard.

3. AI and cloud native: from curiosity to mutual dependency

One number in the CNCF report tends to surprise people: only 41% of professional AI/ML developers qualify as “cloud native”.

(State of Cloud Native Development Q3 2025)

The authors’ explanation is revealing: a large portion of these teams rely on managed ML services (MLaaS) that hide infrastructure details and reduce the incentive to learn cloud-native patterns deeply. At the same time, they estimate about 7.1 million AI/ML developers who are cloud native, many of them overlapping with the backend universe.

(Same source: State of Cloud Native Development Q3 2025)

Meanwhile, the CNCF Tech Radar Q3 2025 shows the other side of the story:

- Inference tools like NVIDIA Triton, DeepSpeed, TensorFlow Serving, and BentoML land in the “Adopt” ring, scoring high in usefulness and maturity.

- In ML orchestration, technologies like Apache Airflow are recommended for adoption.

- In “agentic” AI platforms, concepts like Model Context Protocol (MCP) and open model stacks appear in the adoption zone.

(CNCF Tech Radar – Q3 2025)

The implicit message:

- Cloud-native infrastructure is becoming the minimum viable base for running AI in production with real guarantees.

- AI, in turn, is pushing infrastructure complexity outward: edge, real-time data, new monitoring and security patterns.

For teams in emerging markets, the practical questions aren’t “Triton or vLLM?” The questions are closer to:

- How are we going to version, deploy, and monitor AI models with the same rigor we use for microservices?

- Who on the team understands Kubernetes, data, and ML at the same time, and what skill gaps do we need to close?
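One possible starting point for the first question is to identify every model artifact by a content digest, in the same spirit as pinning a container image by digest. The sketch below is only an illustration: `ModelRelease`, `release_from_bytes`, `is_same_artifact`, and the `fraud-scorer` name are hypothetical, not part of any tool named in the reports.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelRelease:
    """Immutable record of one deployable model version (hypothetical schema)."""
    name: str
    version: str   # human-readable version, e.g. "1.4.0"
    sha256: str    # hex digest of the serialized model artifact

    @property
    def reference(self) -> str:
        # Digest-pinned reference, modeled on name:tag@sha256:... image pinning.
        return f"{self.name}:{self.version}@sha256:{self.sha256}"


def release_from_bytes(name: str, version: str, artifact: bytes) -> ModelRelease:
    """Create a release record whose digest is computed from the artifact bytes."""
    return ModelRelease(name, version, hashlib.sha256(artifact).hexdigest())


def is_same_artifact(release: ModelRelease, artifact: bytes) -> bool:
    """Check that the bytes about to be deployed match the recorded release."""
    return hashlib.sha256(artifact).hexdigest() == release.sha256


if __name__ == "__main__":
    weights = b"serialized-model-bytes"  # stand-in for a real model file
    release = release_from_bytes("fraud-scorer", "1.4.0", weights)
    print(release.reference)
    print(is_same_artifact(release, weights))         # same bytes
    print(is_same_artifact(release, weights + b"x"))  # tampered or rebuilt artifact
```

Recording the digest alongside the version gives deployment and monitoring a shared, unambiguous identifier for "which model is running", which is the same property teams already rely on for microservice images.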

4. Kubernetes in production: maturity with a cost hangover

Numbers from the State of Production Kubernetes 2025 make one point very clear: Kubernetes is no longer an experiment.

For the organizations surveyed (all with 250+ employees):

- Kubernetes is treated as mission-critical infrastructure.

- 90% expect their AI workloads on K8s to grow in the next 12 months.

- Multi-environment operations are the norm: a “typical adopter” runs clusters in five or more environments (three major hyperscalers, on-prem, GPU or sovereign clouds).

- Cost is the leading pain point: 42% name it as their top challenge; 88% report year-over-year increases in Kubernetes TCO; 92% are investing in optimization tools, many of them AI-driven.

- Edge computing with Kubernetes is mainstream: 50% run K8s at the edge in production.

The Data on Kubernetes 2025 Report shows that “DoK” (data on Kubernetes) has moved beyond experimentation into an operational excellence phase:

- Nearly half of organizations run 50% or more of their data workloads in production on Kubernetes.

- 62% attribute 11% or more of their revenue to data workloads on Kubernetes.

- Operational priorities have shifted away from “can we do this?” to:

  - performance optimization (46%),

  - security and compliance (42%),

  - skills and talent gaps (40%).

- The top priority for 2025 is cost optimization, followed very closely by improving AI/ML capabilities on Kubernetes.

- The workloads growing fastest:

  - Databases (66%) remain the #1 workload.

  - Analytics (57%) and AI/ML (44%) cement Kubernetes as a data platform, not just a microservices platform.

- Vector databases show up as critical infrastructure: roughly 80% already use or plan to use them, and 77% see them as essential for RAG and AI applications.

On the versioning and security side, Datadog Security Labs reports real-world progress: as of October 2025, 78% of observed hosts were running Kubernetes versions in mainstream support, 19% in extended support, and just 3% on unsupported versions, helped by distros offering LTS support.

(Article: A 2025 look at real-world Kubernetes version adoption)
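To produce a comparable breakdown for your own fleet, one approach is to classify each cluster by how many minor releases it trails the newest one. This is a sketch under stated assumptions, not Datadog's methodology: the three-release mainstream window follows the upstream practice of maintaining the three most recent minor releases, the extended window is a placeholder because it varies by vendor, and the fleet and `latest_minor` values are made up.

```python
from collections import Counter


def parse_minor(version: str) -> int:
    """Return the minor number from strings such as 'v1.31.4' or '1.29.4-gke.100'."""
    return int(version.lstrip("v").split(".")[1])


def support_tier(version: str, latest_minor: int,
                 mainstream_window: int = 3, extended_window: int = 3) -> str:
    """Classify a version by how many minors it trails the newest release.

    mainstream_window=3 mirrors the upstream practice of maintaining the three
    newest minor releases; extended_window is a placeholder, since the length
    of extended support depends on the vendor.
    """
    age = latest_minor - parse_minor(version)
    if age < mainstream_window:
        return "mainstream"
    if age < mainstream_window + extended_window:
        return "extended"
    return "unsupported"


def tier_shares(versions, latest_minor, **windows):
    """Percentage of clusters in each tier; empty input yields an empty dict."""
    if not versions:
        return {}
    counts = Counter(support_tier(v, latest_minor, **windows) for v in versions)
    return {tier: 100 * n / len(versions) for tier, n in counts.items()}


if __name__ == "__main__":
    fleet = ["v1.34.0", "v1.33.2", "v1.30.1", "v1.24.0"]  # hypothetical inventory
    print(tier_shares(fleet, latest_minor=34))  # latest_minor is an assumed value
```

Keeping the windows as parameters lets each team plug in the support policy of its own distribution or managed service.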

Taken together, these studies suggest a central thesis:

Kubernetes and the cloud-native ecosystem sit at the core of data and AI workloads today, and the maturity challenge has shifted from “getting it into production” to operating it efficiently, securely, and economically.

5. Observability: when downtime has a price tag

New Relic’s reports put dollar figures on something most teams feel but rarely quantify: the real cost of “the system is down.”

(2025 Observability Forecast – New Relic)

From the 2025 Observability Forecast:

- A high-business-impact outage has a median cost of $2 million per hour, and the annual median cost of such incidents is $76 million for surveyed organizations.

(These numbers also appear in New Relic’s press release on BusinessWire: New Relic Study Reveals Businesses Face an Annual Median Cost of $76 Million from High-Impact IT Outages)

- Organizations with full-stack observability manage to cut those outage costs roughly in half (from $2M to about $1M per hour) and report fewer incidents plus faster detection.

In the Retail & eCommerce slice:

- The median cost of a critical outage is $1 million per hour, about half the cross-industry figure.

- Nearly one in three retailers experiences critical outages weekly.

- 46% report a 2x or higher ROI on observability investments.

- Companies are reducing tool sprawl: average observability tools drop from 5.9 in 2022 to 3.9 in 2025, and the share using a single platform rises from 3% to 12%.

(Report: State of Observability for Retail 2025)

The link to cloud native is straightforward:

- As services, clusters, and regions multiply, the risk of invisible fires grows.

- The data shows that consolidating observability and pushing it to full-stack isn’t a “nice to have” architecture goal — it’s a concrete way to reduce financial loss.

In emerging markets, where IT budgets are often tighter, it’s worth flipping the usual framing:

How much of what we label “expensive” in observability or SRE is actually just insurance for systems that already carry a large chunk of the business?
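The insurance framing can be made concrete with a simple expected-loss calculation. The sketch below is illustrative only: the `OutageProfile` fields, the example figures, and the assumed 50% reduction are hypothetical inputs. The 50% loosely echoes the halving reported for full-stack observability, but your own incident data should replace it.

```python
from dataclasses import dataclass


@dataclass
class OutageProfile:
    """Hypothetical inputs describing one organization's outage exposure."""
    cost_per_hour: float          # lost revenue plus recovery cost for one outage hour
    outage_hours_per_year: float  # expected hours of high-impact downtime per year


def annual_outage_cost(profile: OutageProfile) -> float:
    """Expected yearly loss from high-impact outages."""
    return profile.cost_per_hour * profile.outage_hours_per_year


def break_even_spend(profile: OutageProfile, expected_reduction: float) -> float:
    """Largest yearly spend that pays for itself if outage cost falls by
    `expected_reduction`, expressed as a fraction between 0 and 1."""
    if not 0 <= expected_reduction <= 1:
        raise ValueError("expected_reduction must be between 0 and 1")
    return annual_outage_cost(profile) * expected_reduction


if __name__ == "__main__":
    # Made-up mid-sized business: $20,000 per outage hour, 12 outage hours per year.
    profile = OutageProfile(cost_per_hour=20_000, outage_hours_per_year=12)
    print(f"Annual outage cost: ${annual_outage_cost(profile):,.0f}")
    print(f"Break-even spend at 50% reduction: ${break_even_spend(profile, 0.5):,.0f}")
```

In this made-up case the expected loss is $240,000 per year, so any observability or SRE budget below $120,000 pays for itself if it halves outage cost.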

6. FinOps and the cost of AI: the cloud won’t manage itself

The State of FinOps 2025 adds an economic layer to all of this.

From its public summary and coverage:

- The survey represents organizations managing more than $69 billion in cloud spend.

- 31% of respondents spend over $50 million per year on cloud services alone.

- 97% invest across multiple infrastructure areas (public cloud, private cloud, SaaS, data, etc.).

- 63% already treat AI spend as part of their FinOps practice, up from 31% the year before.

- FinOps teams typically handle a dozen or more capabilities (optimization, forecasting, reporting, SaaS, licensing, private cloud, data centers, and more).

In parallel, the Data on Kubernetes 2025 report shows that cost optimization is the #1 priority for organizations running data and AI on Kubernetes, especially around storage and data transfer.

(Data on Kubernetes 2025 Report)

And the State of Production Kubernetes 2025 quantifies that 88% of teams report year-over-year TCO increases for Kubernetes, while 92% are investing in AI-powered optimization tools.

(State of Production Kubernetes 2025)

Together, these signals emphasize something worth keeping in mind whenever you read a cloud-native report:

- The conversation is no longer only architectural (“microservices or monolith?”).

- It’s also economic and governance-driven (“who actually sees AI, storage, and edge spend end-to-end?”).

In emerging markets — where budget elasticity is limited — this becomes more than a trend:

The AI wave rides on data and cloud native, but without cost discipline, it quickly turns into a snowball that’s hard to slow down.
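A lightweight first step toward end-to-end visibility is to roll up billing line items by a consistent set of tags. The sketch below assumes a hypothetical export in which each item carries a `cost_usd` value and optional `category` and `team` tags. It does not follow any provider's real billing schema.

```python
from collections import defaultdict


def spend_by(items: list[dict], key: str) -> dict[str, float]:
    """Total cost per tag value; items missing the tag are grouped as 'untagged'."""
    totals: dict[str, float] = defaultdict(float)
    for item in items:
        totals[item.get(key, "untagged")] += item["cost_usd"]
    return dict(totals)


def untagged_share(items: list[dict], key: str) -> float:
    """Fraction of total spend that nobody has claimed under the given tag."""
    total = sum(item["cost_usd"] for item in items)
    if total == 0:
        return 0.0
    return spend_by(items, key).get("untagged", 0.0) / total


if __name__ == "__main__":
    # Hypothetical monthly line items.
    items = [
        {"service": "gpu-inference", "category": "ai", "team": "ml", "cost_usd": 4200.0},
        {"service": "object-storage", "category": "storage", "team": "data", "cost_usd": 1800.0},
        {"service": "edge-nodes", "category": "edge", "cost_usd": 900.0},  # no team tag
        {"service": "vector-db", "category": "ai", "team": "data", "cost_usd": 1100.0},
    ]
    print(spend_by(items, "category"))
    print(spend_by(items, "team"))
    print(f"Unowned spend: {untagged_share(items, 'team'):.1%}")
```

The untagged share is often the most useful number to watch early on: it measures how much spend has no owner who could slow the snowball down.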

7. What all of this implies for emerging markets

Global reports don’t always break things down by region, yet they highlight patterns that resonate strongly in emerging markets:

- Skill gaps at the intersection of Kubernetes, data, and AI

  - The DoK report flags the lack of combined skills in Kubernetes, data workloads, performance, and cost management as a top challenge for 40% of organizations.

    (Data on Kubernetes 2025 Report)

  - In many emerging markets, this is amplified by wage differentials, fewer senior profiles, and remote talent leaving for global employers.

- Distributed systems in uneven connectivity environments

  - The global push toward hybrid, multi-cloud, and edge assumes relatively reliable networks.

    (State of Cloud Native Development Q3 2025, State of Production Kubernetes 2025)

  - A lot of organizations in emerging markets design for spotty connectivity, expensive links, and local data centers with varying capabilities.

- Real business impact, even for mid-sized organizations

  - If global retailers report $1M/hour outages and other sectors $2M/hour, you don’t need to replicate those numbers to understand the ratio: an hour-long outage in payments, logistics, or healthcare can be existential for a mid-sized business in an emerging market.

    (2025 Observability Forecast, State of Observability for Retail 2025)

- A chance to “skip stages”

  - Many of the issues in these reports (tool sprawl, rushed repatriation, hard-to-manage “snowflake” clusters) come from years of decisions made in an era of cheap capital.

    (State of Production Kubernetes 2025, 2025 Observability Forecast, State of FinOps 2025)

  - Constraints in emerging markets create an opening: design platforms and practices with frugality in mind from day one, learning from mistakes already documented elsewhere.

From that angle, the most useful question for teams outside the traditional tech hubs might not be “are we behind?” but:

- Which parts of these learnings can we adopt selectively to gain resilience and efficiency?

- Where do we want to be on the “front edge,” and where are we better off as smart fast followers?

8. How to read these numbers inside your organization

Using the State of Cloud Native Development as the primary lens and the other reports as context, you can build a simple internal checklist for any technical or leadership team:

- Where are we on the cloud-native maturity curve?

  - Do we use containers, API gateways, observability, and microservices consistently?

  - Are we closer to the 56% of backend developers who are already cloud native… or do we still operate as if infrastructure were mostly static?

    (State of Cloud Native Development Q3 2025)

- How distributed is our infrastructure in practice?

  - Do we know when it makes sense to stay on-prem, when to go public cloud, when we need sovereign cloud, and when edge is justified?

  - Is there a deliberate hybrid/multi-cloud strategy, or just an accumulation of past decisions?

    (State of Cloud Native Development Q3 2025, State of Production Kubernetes 2025)

- AI: black box service, or something we can actually operate?

  - Are we fully dependent on MLaaS with little visibility into performance and cost?

    (State of Cloud Native Development Q3 2025, CNCF Tech Radar – Q3 2025)

  - Who in the organization has a working grasp of models, data, Kubernetes, security, and cost all at once?

- What is an hour of downtime worth in our context?

  - Even if your scale is smaller than the New Relic case studies, do you have an internal estimate?

    (2025 Observability Forecast)

  - Are investments in observability, SRE, and resilience framed against that number, or just against a vague sense of “this tool feels expensive”?

- Who watches the costs, and with what visibility?

  - Do we have any form of FinOps practice, even a lightweight one, that covers AI, SaaS, and data centers, not just IaaS?

    (State of FinOps 2025)

  - Can we distinguish between spend that reflects necessary capacity and spend that comes from inefficiencies (idling clusters, poor storage design, unnecessary data transfer)?

These questions don’t replace architectural work. They frame it. They help you read “56% adopted X” or “30% use Y” without losing sight of what actually matters inside your own org.
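The last checklist question, separating necessary capacity from inefficiency, can be approximated by comparing what a workload requests with what it actually uses. This is a minimal sketch with hypothetical inputs: the workload records, the 20% headroom, and the proportional cost split are assumptions, not a method taken from any of the reports.

```python
def idle_spend(monthly_cost: float, requested: float, used: float,
               headroom: float = 0.2) -> float:
    """Estimate the part of a workload's cost tied to capacity it does not need.

    `requested` and `used` share a unit (for example CPU cores). Capacity up to
    used * (1 + headroom) counts as necessary; the rest counts as idle, and cost
    is split in proportion to capacity.
    """
    if requested <= 0:
        return 0.0
    needed = min(requested, used * (1 + headroom))
    return monthly_cost * (requested - needed) / requested


def idle_report(workloads: list[dict], headroom: float = 0.2) -> dict[str, float]:
    """Idle spend per workload name, rounded to whole currency units."""
    return {
        w["name"]: round(idle_spend(w["monthly_cost"], w["requested"], w["used"], headroom))
        for w in workloads
    }


if __name__ == "__main__":
    # Hypothetical workloads: cost in USD per month, capacity in CPU cores.
    workloads = [
        {"name": "batch-etl", "monthly_cost": 1000.0, "requested": 10.0, "used": 4.0},
        {"name": "checkout-api", "monthly_cost": 2500.0, "requested": 10.0, "used": 9.0},
    ]
    print(idle_report(workloads))
```

In this example the batch job carries about $520 of idle spend per month, while the API running close to its requested capacity carries none; the headroom parameter keeps a reasonable safety buffer from being counted as waste.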

9. Where the conversation is heading (and what comes next)

Putting the pieces together, the pattern for 2025 looks remarkably consistent:

- Cloud native is mainstream in backend and expanding into AI/ML, data, and edge.

(State of Cloud Native Development Q3 2025, Data on Kubernetes 2025 Report, State of Production Kubernetes 2025)

- Hybrid, multi-cloud, and distributed paradigms are becoming the default reality, not an exception.

(State of Cloud Native Development Q3 2025, State of Production Kubernetes 2025)

- Kubernetes is less of an end goal and more of a means to run data, AI, and core business workloads at scale.

(Data on Kubernetes 2025 Report, State of Production Kubernetes 2025, A 2025 look at real-world Kubernetes version adoption)

- The conversation is shifting from “adoption” to “operational excellence”: performance, observability, security, and cost.

(Data on Kubernetes 2025 Report, 2025 Observability Forecast, State of FinOps 2025)

- FinOps is broadening to cover AI, SaaS, and other flexible tech spend categories.

(State of FinOps 2025)

For emerging markets, this opens at least three lines of inquiry for future articles or internal studies:

- How does this level of maturity translate to organizations that don’t have the same financial muscle, but do face growing pressure to integrate AI and modernize their stack?

- What training, community, and standardization strategies can help close the global skill gaps that reports already highlight — gaps that are more acute in many emerging economies?

(Data on Kubernetes 2025 Report, State of Cloud Native Development Q3 2025)

- How can we design platforms and practices that bake in observability, security, and FinOps from day one, instead of bolting them on later once TCO becomes unmanageable?

Let’s keep the conversation going

This article starts from a shared need: making better technical decisions in a world where cloud native, data, and AI move fast and the margin for trial and error is small.

At Cuemby, we’ve spent years working with organizations and communities wrestling with the same questions that run through this piece: how to run cloud-native practices in production, how to design platforms that actually support the business, how to bring AI into the stack while staying on top of security, observability, and cost. That experience taught us that industry reports become truly useful when they’re translated into concrete contexts and turned into conversations inside real teams.

If your organization, technical community, or educational initiative is exploring these topics and wants to go deeper on any of the areas we touched on—cloud native, production Kubernetes, data, AI, observability, FinOps, or internal platforms—our team is available to connect and share perspective.

- You can schedule a meeting with our team here:

https://app.lemcal.com/@cuemby/

- Or reach out directly by email:

elsa@cuemby.com

The invitation is straightforward: take these findings as a starting point and, if it’s useful for you, open up spaces for dialogue where we can compare realities across emerging markets and help build more sustainable paths for adopting cloud-native and AI technologies.
